INQ (Incremental Network Quantization)

Summary of "Incremental Network Quantization: Towards Lossless CNNs with Low-Precision Weights"

1. Problem

- Deep CNNs place heavy burdens on memory and other computational resources

- Reducing these burdens can cause performance loss (accuracy loss)

2. Solution

- INQ (Incremental Network Quantization): converts any pre-trained full-precision CNN into a low-precision version whose weights are constrained to be either powers of two or zero
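As a rough illustration of the "powers of two or zero" constraint, the sketch below derives the exponent range for one layer and rounds each weight into it. It follows our reading of the INQ paper's formulas (n1 = floor(log2(4s/3)) with s the largest absolute weight in the layer, n2 = n1 + 1 - 2^(b-1)/2 for bit-width b); the helper names and the plain-list representation are hypothetical.

```python
import math


def power_of_two_levels(max_abs, bits):
    """Exponent range [n2, n1] for a layer whose largest |weight| is max_abs.

    Formulas follow our reading of the INQ paper; the helper name is hypothetical.
    """
    n1 = math.floor(math.log2(4.0 * max_abs / 3.0))
    n2 = n1 + 1 - 2 ** (bits - 1) // 2
    return n1, n2


def quantize_weight(w, n1, n2):
    """Round w to 0 or sign(w) * 2**n with n2 <= n <= n1.

    |w| maps to beta = 2**n when it is at least the midpoint between beta and the
    next-smaller candidate alpha (alpha = 0 below the smallest power of two).
    """
    a = abs(w)
    for n in range(n1, n2 - 1, -1):  # largest candidate first
        beta = 2.0 ** n
        alpha = 0.0 if n == n2 else beta / 2
        if a >= (alpha + beta) / 2:
            return math.copysign(beta, w)
    return 0.0  # too small even for the smallest power of two


weights = [0.9, -0.3, 0.05, 0.0, -0.6]
n1, n2 = power_of_two_levels(max(abs(w) for w in weights), bits=5)
quantized = [quantize_weight(w, n1, n2) for w in weights]
print(n1, n2)     # 0 -7
print(quantized)  # [1.0, -0.25, 0.0625, 0.0, -0.5]
```

Because adjacent nonzero candidates differ by a factor of two, the midpoint thresholds amount to rounding |w| to the nearest candidate, with ties going to the larger magnitude.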

3. How

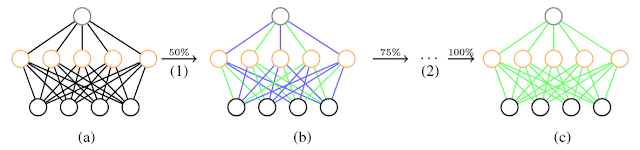

- Three interdependent operations:

1) Weight Partition

- Divide the weights in each layer of a pre-trained CNN model into two disjoint groups

- Weights in the first group

+ Responsible for forming a low-precision base

+ These weights are therefore quantized using a variable-length encoding method

- Weights in the second group

+ Responsible for compensating for the accuracy loss caused by quantization

+ These weights are therefore re-trained
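A minimal sketch of the partition step for one layer, assuming a pruning-inspired criterion in which larger-magnitude weights go into the quantized group first (our understanding of the INQ paper's default; the post does not state the criterion). The mask convention (0 = quantized group, 1 = re-trained group), the `fraction` parameter and the function name are illustrative assumptions.

```python
def partition_mask(weights, frozen, fraction):
    """Mask T for one layer: 0 = quantized group, 1 = full-precision group to re-train.

    Assumption: larger-magnitude weights are quantized first (pruning-inspired
    criterion). `frozen` holds indices already quantized in earlier rounds and
    `fraction` is the accumulated portion to have quantized after this round.
    """
    target = round(fraction * len(weights))
    # Still-free indices, largest |w| first.
    free = sorted((i for i in range(len(weights)) if i not in frozen),
                  key=lambda i: abs(weights[i]), reverse=True)
    newly = free[:max(0, target - len(frozen))]
    quantized = set(frozen) | set(newly)
    return [0 if i in quantized else 1 for i in range(len(weights))]


weights = [0.9, -0.3, 0.05, 0.4, -0.6, 0.2, -0.45, 0.1]
mask_50 = partition_mask(weights, frozen=set(), fraction=0.5)
frozen = {i for i, t in enumerate(mask_50) if t == 0}
mask_75 = partition_mask(weights, frozen, fraction=0.75)
print(mask_50)  # [0, 1, 1, 0, 0, 1, 0, 1]
print(mask_75)  # [0, 0, 1, 0, 0, 0, 0, 1]
```

In INQ the still-free weights are re-trained between rounds, so their ordering can change; the weights are held fixed here only to keep the example short.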

2) Group-Wise Quantization

- The quantization of the first group and the re-training of the second group can be formulated as the following joint optimization problem:

- Using SGD (Stochastic Gradient Descent), the update rule is:
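The joint optimization and the update rule referred to above can be written as follows. This is our reconstruction in the notation we recall from the INQ paper: W_l is the weight matrix of layer l (out of L layers), \mathcal{L} the network loss, R a regularizer weighted by λ, P_l the layer's candidate set of powers of two and zero, T_l a binary mask with T_l(i,j) = 0 for weights in the quantized group and 1 for weights being re-trained, and γ the learning rate. Multiplying the gradient by T_l(i,j) is what keeps already-quantized weights fixed during re-training.

```latex
\begin{aligned}
&\min_{W_l}\; E(W_l) = \mathcal{L}(W_l) + \lambda R(W_l)
  \quad \text{s.t. } W_l(i,j) \in P_l \ \text{if } T_l(i,j) = 0, \quad 1 \le l \le L, \\
&P_l = \{\pm 2^{n_1}, \dots, \pm 2^{n_2}, 0\}, \\
&W_l(i,j) \leftarrow W_l(i,j) - \gamma \, \frac{\partial E}{\partial W_l(i,j)} \, T_l(i,j).
\end{aligned}
```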

3) Re-Training

- The above operations are repeated on the remaining full-precision weights until all weights have been converted into low-precision ones

- Overall Procedure of INQ

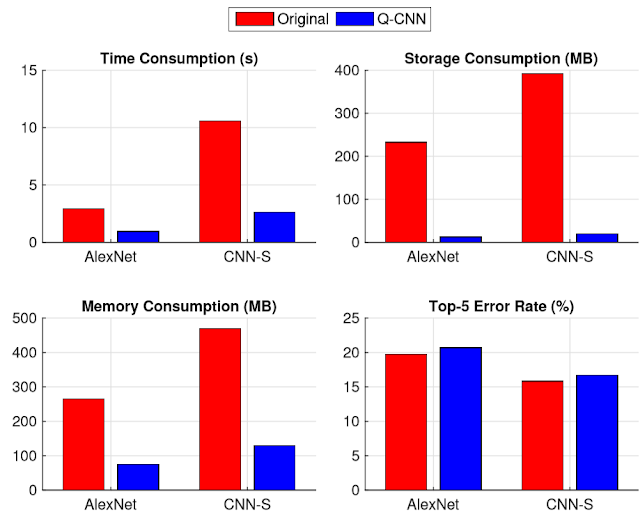
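A toy end-to-end sketch of the incremental loop, on a linear least-squares problem rather than a CNN. Assumptions (none of them come from the post): one 8-weight "layer" with hand-picked pre-trained values, full-batch gradient descent standing in for SGD, accumulated quantized portions of 50%, 75%, 87.5% and 100% as an example schedule, magnitude-based partitioning, and a 5-bit candidate set computed once from the pre-trained weights. All function names are hypothetical.

```python
import math
import random


def levels(max_abs, bits):
    # Exponent range [n2, n1] (our reading of the INQ formulas).
    n1 = math.floor(math.log2(4.0 * max_abs / 3.0))
    return n1, n1 + 1 - 2 ** (bits - 1) // 2


def quantize(w, n1, n2):
    # Nearest of {0, +-2**n2, ..., +-2**n1}, ties toward the larger magnitude;
    # values beyond the range clamp to +-2**n1.
    a = abs(w)
    for n in range(n1, n2 - 1, -1):
        beta = 2.0 ** n
        alpha = 0.0 if n == n2 else beta / 2
        if a >= (alpha + beta) / 2:
            return math.copysign(beta, w)
    return 0.0


def mse(w, data):
    return sum((sum(xi * wi for xi, wi in zip(x, w)) - y) ** 2 for x, y in data) / (2 * len(data))


def mse_grad(w, data):
    """Gradient of 0.5 * mean((x . w - y)**2) with respect to w."""
    g = [0.0] * len(w)
    for x, y in data:
        err = sum(xi * wi for xi, wi in zip(x, w)) - y
        for k, xk in enumerate(x):
            g[k] += err * xk / len(data)
    return g


def inq(w, data, portions=(0.5, 0.75, 0.875, 1.0), bits=5, lr=0.1, steps=200):
    w = list(w)
    n1, n2 = levels(max(abs(v) for v in w), bits)  # candidate set fixed up front (assumption)
    T = [1] * len(w)  # 1 = full precision (re-trained), 0 = quantized and frozen
    for p in portions:
        done = len(w) - sum(T)
        free = sorted((i for i in range(len(w)) if T[i]), key=lambda i: abs(w[i]), reverse=True)
        # 1) weight partition + 2) group-wise quantization of the newly selected weights
        for i in free[:max(0, round(p * len(w)) - done)]:
            w[i] = quantize(w[i], n1, n2)
            T[i] = 0
        # 3) re-training: masked gradient steps, so weights with T == 0 never move
        for _ in range(steps if any(T) else 0):
            g = mse_grad(w, data)
            w = [wi - lr * gi * ti for wi, gi, ti in zip(w, g, T)]
    return w, T, (n1, n2)


random.seed(0)
w_pre = [0.9, -0.3, 0.05, 0.4, -0.6, 0.2, -0.45, 0.1]  # stands in for a pre-trained layer
xs = [[random.gauss(0.0, 1.0) for _ in w_pre] for _ in range(64)]
data = [(x, sum(a * b for a, b in zip(x, w_pre))) for x in xs]

w_final, T_final, (n1, n2) = inq(w_pre, data)
one_shot = [quantize(v, n1, n2) for v in w_pre]
print("INQ weights:", w_final)
print("one-shot quantization loss:", mse(one_shot, data))
print("INQ loss:", mse(w_final, data))
```

The printed losses let you compare incremental quantization with quantizing everything at once on this toy problem; the outcome depends on the data and schedule, so treat it as an experiment rather than a benchmark.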

4. Performance

- 5-bit quantization achieves higher accuracy than the 32-bit floating-point reference

+ Variable-length encoding

+ 1 bit to represent the zero value

+ 4 bits to represent at most 16 different power-of-two values

- Combining network pruning with INQ also shows impressive results
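The 5-bit budget in the list above can be checked against the exponent range of the candidate set. The formula n_2 = n_1 + 1 - 2^{b-1}/2 is our reading of the INQ paper, with n_1 set by the layer's largest weight magnitude; for b = 5:

```latex
\begin{aligned}
&\text{zero: } 1 \text{ bit}, \qquad \text{remaining: } b - 1 = 4 \text{ bits} \;\Rightarrow\; 2^{4} = 16 \text{ nonzero values} \\
&n_2 = n_1 + 1 - \tfrac{2^{b-1}}{2} = n_1 + 1 - 8 = n_1 - 7 \;\Rightarrow\; \{n_2, \dots, n_1\} \text{ contains } 8 \text{ exponents} \\
&8 \text{ exponents} \times 2 \text{ signs} = 16 \text{ values } \pm 2^{n}, \text{ plus } 0: \quad P_l = \{\pm 2^{n_1}, \dots, \pm 2^{n_1 - 7}, 0\}
\end{aligned}
```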
